Regression Testing in the Microservices Age

In this white paper, we explain how testing in a microservices environment differs dramatically from testing a monolithic application.

These differences create five major challenges for teams maintaining high-velocity microservices apps:

- High complexity with low visibility

- Complex and unpredictable rollback of changes

- Limited visibility across the organization for changes made to individual components

- Very long run time for full regression across all microservices

- Decreasing marginal benefit of new tests

We will show how Quality Intelligence Platforms can mitigate these challenges, create visibility and avoid slowing down development with unplanned, ineffective, or slow running tests.

Quick Definition: What is a Microservices Architecture?

We all know what microservices are, but different people have different definitions. When we say microservices, we mean an application broken up into autonomous components.

Microservices can communicate via REST APIs, event queues, common files, or shared database records. The mechanism for communication between microservices can have a major impact on quality:

- If REST is used, the architecture is more susceptible to integration problems, because services call each other's APIs directly. For example, a change to the API of one microservice could break functionality in another service.

- If communication is based on events, common files or database records, microservices are less dependent on each other, making the integration more robust.
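To illustrate how a REST API change can break a consumer, here is a minimal sketch of a consumer-driven contract check in Python. It does not use any real contract-testing tool; the `ORDER_CONTRACT` fields and the `contract_violations` helper are hypothetical. In practice, a check like this would run in the provider's pipeline before a new version is released.

```python
from typing import Any

# Hypothetical contract: the fields a consumer service reads from a
# provider's REST response, and the Python type it expects for each.
ORDER_CONTRACT: dict[str, type] = {"id": str, "total": float, "currency": str}


def contract_violations(contract: dict[str, type], response: dict[str, Any]) -> list[str]:
    """List every contract field that is missing from, or mistyped in, a response."""
    problems = []
    for field, expected in contract.items():
        if field not in response:
            problems.append(f"missing field '{field}'")
        elif not isinstance(response[field], expected):
            actual = type(response[field]).__name__
            problems.append(f"field '{field}' is {actual}, expected {expected.__name__}")
    return problems


if __name__ == "__main__":
    # The provider renamed "total" to "amount"; the consumer would break at runtime.
    new_response = {"id": "A-17", "amount": 42.5, "currency": "EUR"}
    print(contract_violations(ORDER_CONTRACT, new_response))  # ["missing field 'total'"]
```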

Each microservice will typically have its own development team, a separate build, and a test pipeline including one or more of the following test types:

- Unit tests

- Functional tests

- End-to-end/integration tests

- Regression tests

- Manual tests

- Exploratory tests

Most applications based on a microservices architecture have anywhere from dozens to hundreds of services. This means that organizations moving from a monolithic to a microservices infrastructure go from one build and one test pipeline (however large) to hundreds of builds and multiple test pipelines.

How is Microservices Testing Different from Monolithic App Testing?

The following table compares essential elements of testing in a monolithic architecture with their counterparts in a microservices architecture, where they are often more difficult or more complex.

| Monolithic Architecture | Microservices Architecture |
|---|---|
| One dev/test/deploy pipeline | Dozens/hundreds of pipelines (one per microservice) |
| One build, or several builds for major components | Dozens/hundreds of builds (typically one per microservice) |
| One release for the entire application | Dozens/hundreds of individual releases for individual microservices (every build is a release candidate) |
| Commits/builds once every few days or weeks (in an agile environment) | Commits/builds many times a day for different microservices |
| Problems with a release break the build/break production, possible to roll back | Multiple microservices with bugs might be deployed to production and cause small “fires”, no easy rollback |
| Interdependencies between components or services in the application | Microservices are loosely coupled, with minimal to no dependencies |

5 Reasons Microservices Testing is More Difficult than Monolithic App Testing

You write a new microservice, write some unit tests, and deploy it to production. What happens next is not entirely clear. The following are five reasons microservices testing is very different from, and much more difficult than, traditional application testing.

1. Dozens of Dev Pipelines—Huge Complexity with No Visibility

In a monolithic application there is one dev/test/deploy pipeline, and one release candidate build, or a series of builds, per release. When moving to a microservices environment, there can be 20, or even 200, different development pipelines, each with its own build and release candidates, each pulled into the testing pipeline for verification before it is promoted.

Each microservice has its own tests, and even a small component can have thousands of them. The first challenge is that there is no easy way to see which types of tests exist across all microservices, and how many of each.

Each microservice has its own level of test coverage. For simple unit tests, you can track coverage using tools like JaCoCo. However, as the application grows, it becomes very hard to track coverage across all components. You can run JaCoCo or similar tools for each microservice, but aggregating the results to measure coverage for the entire application takes considerable effort.
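One pitfall when aggregating is averaging per-service percentages, which gives a small service the same weight as a large one. The sketch below instead sums the report-level `LINE` counters from each service's JaCoCo XML report (the `<counter type="LINE" ...>` elements that sit directly under `<report>`). The function names and the idea of collecting one report per service are our assumptions, not a JaCoCo feature, and the sample reports are made up.

```python
import xml.etree.ElementTree as ET


def line_counts(report_xml: str) -> tuple[int, int]:
    """Return (covered, missed) from the report-level LINE counter of a JaCoCo XML report."""
    root = ET.fromstring(report_xml)
    # Report-wide totals are <counter> elements that are direct children of <report>;
    # findall("counter") deliberately skips the per-package and per-class counters.
    for counter in root.findall("counter"):
        if counter.get("type") == "LINE":
            return int(counter.get("covered")), int(counter.get("missed"))
    return 0, 0


def aggregate_line_coverage(reports: dict[str, str]) -> float:
    """Combine per-service reports by summing line counts, not by averaging percentages."""
    covered = missed = 0
    for xml_text in reports.values():
        c, m = line_counts(xml_text)
        covered += c
        missed += m
    total = covered + missed
    return covered / total if total else 0.0


# Made-up reports: a large, well-tested service and a small, poorly tested one.
REPORTS = {
    "orders": '<report name="orders"><counter type="LINE" missed="100" covered="900"/></report>',
    "billing": '<report name="billing"><counter type="LINE" missed="90" covered="10"/></report>',
}
```

Here `aggregate_line_coverage(REPORTS)` gives 910 / 1100, about 83%, whereas averaging the two services' percentages (90% and 10%) would report 50%.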

There is also no way to see test coverage for non-unit tests. What about functional, integration, acceptance, API, security, and performance tests? Visualizing the coverage of these more complex tests is difficult even for one component, let alone for an entire microservices application.

In the old world, the mythical “QA Department” would look at a system, check what has changed recently, decide which new tests are needed, and write manual test scripts or automated tests to ensure those changes are tested. In the new world, there is no QA department consistently performing that analysis.

In a microservices environment, no one really has that level of visibility. No one can tell management whether the application is sufficiently tested and, if not, which types of tests need to be added.

2. No Way to Roll Back

In the old world, there was one big release, with a long list of changes and new features. A major problem would break the build, or break the production environment. It was painful, but it was clear there was a problem and teams were able to roll back to the latest stable release.

In a microservices environment, this is no longer the case. There are numerous code commits and production deployments every day, each time for a different microservice. If the latest build for a specific microservice was problematic, it typically won’t break production. But it may start to cause small “fires” in production, and it won’t always be clear which service is responsible.

Pushing microservices to production can cause small fires, which can add up to a big fire. There is no clear way to tell which microservice caused the problem, and no easy rollback to a stable release.
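Without a single release to roll back, a common first step is to narrow down which deployments could be responsible. The sketch below is a simple time-window heuristic, assuming you have a log of deployments (service, version, time) and the time an incident started; the `Deployment` record, the two-hour default window, and the sample data are hypothetical. It only produces suspects to investigate; it cannot prove which service caused the problem.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Deployment:
    service: str
    version: str
    deployed_at: datetime


def suspect_deployments(
    deployments: list[Deployment],
    incident_start: datetime,
    window: timedelta = timedelta(hours=2),
) -> list[Deployment]:
    """Deployments that landed within `window` before the incident began, newest first."""
    earliest = incident_start - window
    suspects = [d for d in deployments if earliest <= d.deployed_at <= incident_start]
    return sorted(suspects, key=lambda d: d.deployed_at, reverse=True)


# Made-up deployment log for one morning.
DAY = datetime(2024, 1, 15)
DEPLOYS = [
    Deployment("cloud-ui", "2.2.0", DAY.replace(hour=9)),
    Deployment("search", "0.4.1", DAY.replace(hour=10, minute=30)),
    Deployment("billing", "1.8.3", DAY.replace(hour=11, minute=15)),
    Deployment("api-gateway", "1.4.1", DAY.replace(hour=12, minute=30)),
]
```

For an incident starting at noon, `suspect_deployments(DEPLOYS, DAY.replace(hour=12))` returns the billing and search deployments, newest first; the later api-gateway deployment is excluded because it happened after the incident began.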

3. No Clear Responsibility for Integration Tests Across Microservices

When developing a monolithic application in an agile environment, it is clear who is responsible for testing. Development teams are typically responsible for unit tests, and some integration tests. Testing specialists or QA teams are responsible for broader integration testing or acceptance tests.

In a monolithic app, when a new component is introduced or modified, it is clear who is responsible for testing that component, and considering its interaction with other components, to ensure nothing breaks.

In a microservices app, these boundaries break down. There are different teams developing different microservices. They know they need to develop tests for their own microservice, and hopefully, they do.

But it is unclear who is responsible for:

- Testing a complex component, composed of several microservices, as one unit

- Building integration tests that look at multiple components together

- Testing the entire microservices app as a whole

In most organizations, there is no clear line of responsibility for tests that span multiple microservices. As a result, these broader tests may be neglected, ignored, or forgotten.

When someone commits new code, often no one looks holistically at the impact of the change. How will it affect other microservices, the application component this microservice belongs to, or the application as a whole?

Typically, the microservice team will build some unit tests or other isolated tests to ensure their service is working properly. They may also run the existing integration tests to make sure their component doesn’t break anything. But existing tests may not exercise the new microservice, or its modifications, at all. Nor may they detect the side effects these changes cause in other microservices.

A microservices environment leads to a narrow focus on component testing, which is not enough to test the real impact of the change across the application.
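One way to look at a change holistically is to compute its "blast radius": the services that call the changed service directly or transitively, and whose integration tests are therefore worth running. The sketch assumes the call graph is already known (for example, maintained by hand or derived from tracing data); the service names are made up.

```python
from collections import deque

# Hypothetical call graph: each service maps to the services it calls.
DEPENDS_ON: dict[str, set[str]] = {
    "web": {"orders", "users"},
    "orders": {"payments", "inventory"},
    "reports": {"orders"},
    "payments": set(),
    "inventory": set(),
    "users": set(),
}


def affected_services(depends_on: dict[str, set[str]], changed: str) -> set[str]:
    """Every service that calls `changed` directly or through other services."""
    # Invert the graph so we can walk from a service to its callers.
    callers: dict[str, set[str]] = {}
    for service, deps in depends_on.items():
        for dep in deps:
            callers.setdefault(dep, set()).add(service)

    affected: set[str] = set()
    queue = deque([changed])
    while queue:  # breadth-first search over callers
        current = queue.popleft()
        for caller in callers.get(current, set()):
            if caller not in affected:
                affected.add(caller)
                queue.append(caller)
    affected.discard(changed)  # a dependency cycle could lead back to the changed service
    return affected
```

A change to `payments` affects `orders` directly and `web` and `reports` through `orders`, so their integration tests are candidates to run alongside the payments team's own tests.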

4. Full Regression is Slow, Difficult, Even Impossible

Multiple teams writing microservices typically create more and more tests as time goes by. For large applications, the number of tests can quickly explode.

The other side of the coin is that microservices-based applications change very frequently. Each microservice has its own updates and releases, and code commits can be deployed to production multiple times per day. Teams face a dilemma: when each of these changes occurs, what should they test?

Option 1: Run a full regression for each change. This is the conservative approach. But as the application grows, a full regression can take hours or even days to run. In addition, just running all the existing tests doesn’t guarantee catching all issues. Teams must carefully consider how the new code interacts with the rest of the system. Is it covered by the existing regression tests, or are new regression tests needed?

Option 2: Run localized tests and hope for the best. This is the optimistic approach. When a component changes, teams run its unit tests, run integration tests directly related to that component, and deploy. It is understood that this leaves the application at risk of new production issues.

Neither option is a good solution. The result is a testing process that is either slow and unwieldy (which hurts development productivity and velocity) or very partial (creating quality risks with every code change).

The biggest problem is not knowing how the current change interacts with the rest of the system, and not knowing if it is covered by tests. If teams were able to focus their efforts on untested changes, they could avoid a full regression, while still preventing quality problems.

5. Adding More Tests Might Not Improve Quality

Many development teams have the concept of a “quality release”. Every once in a while, when they experience too many production defects, they stop the development of new features and dedicate an entire sprint to bug fixes and tests. The goal is to improve quality quickly.

In our experience, “quality releases” not only fail to improve product quality, they could even do the opposite.

When developers fix bugs and write tests without careful planning, they often write tests that:

- Have already been written in the past

- Overlap with existing tests

- Cover legacy code that has been proven and tested in production for years

- Cover cold code that is very rarely used by actual users

- Cover dead code that is never even executed in production

Redundant tests waste a lot of time. Worse, they require ongoing maintenance and make builds and regression runs slower.
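Redundancy can be estimated from per-test coverage data. Below is a minimal heuristic, assuming you have the set of methods each test executed (the data and test names are made up): a test is flagged when another single test executes everything it does. Coverage is not the same as verification, since two tests may run the same code but assert different behavior, so treat the result as candidates for review rather than deletion.

```python
def redundant_tests(coverage: dict[str, set[str]]) -> set[str]:
    """Tests whose covered methods are all executed by another single test."""
    redundant: set[str] = set()
    names = sorted(coverage)  # fixed order, so exactly one of two identical tests survives
    for name in names:
        for other in names:
            if other == name or other in redundant:
                continue
            if coverage[name] <= coverage[other]:  # subset check
                redundant.add(name)
                break
    return redundant


# Made-up per-test coverage: test name -> methods it executed.
COVERAGE = {
    "test_cart_total": {"Cart.add", "Cart.total"},
    "test_checkout_full": {"Cart.add", "Cart.total", "Pay.charge"},
    "test_refund": {"Pay.refund"},
    "test_refund_copy": {"Pay.refund"},
}
```

For this data, `test_cart_total` is flagged because `test_checkout_full` executes the same methods and more, and one of the two identical refund tests is flagged while the other is kept.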

Development teams make the move to microservices in order to move faster in development. But they end up creating huge test suites that cause them to move slower and slower. A monstrous test suite can stop development dead in its tracks.

There are additional drawbacks of so-called “quality releases”:

- Bug fixes may create regressions, and they may not be covered by any tests. So fixing bugs may actually introduce new quality issues.

- Adding tests is only a temporary fix, because future sprints again generate new code changes, which again are not adequately tested.

The bottom line is that more tests don’t necessarily improve quality. In many cases they may actually make quality worse and slow down the development cycle.

How Quality Intelligence Can Help

Quality Intelligence Platforms are a new category of development tools that provide developers, testers, and R&D management with rich data about the quality of a software product. Quality intelligence tells you which product features are tested, and whether the tests are adequate to prevent quality issues.

SeaLights is an example of a Quality Intelligence Platform that was built for microservices environments. It provides the visibility and insights you need to solve the microservices testing challenge.

See Test Suites and Coverage Across all Microservices

SeaLights starts by providing basic visibility into which tests exist for each microservice. You can see a list of components in your application, expand each one and see all the test suites created for it.

For example, this Java agent has component tests and over 700 unit tests.

SeaLights provides holistic test coverage. This measures what proportion of application code is covered by the tests. It doesn’t only do this for unit tests, but also for functional, integration, end-to-end, even manual and exploratory tests. It covers these additional test types by deeply analyzing application structure and code changes, and understanding how the tests interact with the application.

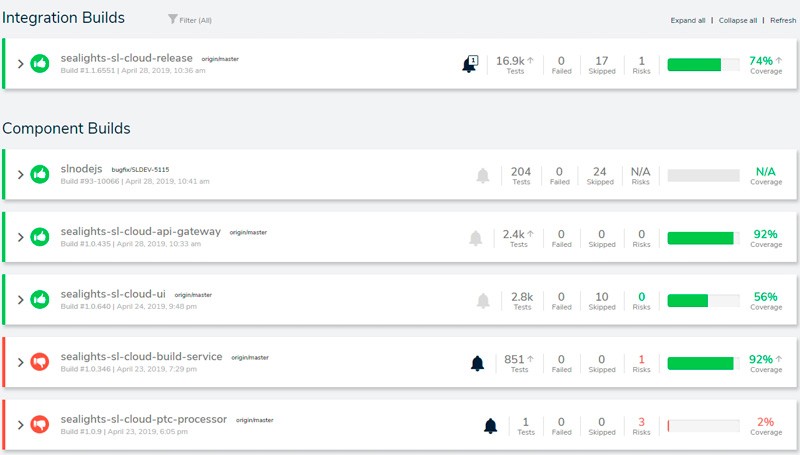

Below are several components and their aggregate, holistic test coverage. The “api-gateway” microservice has 92% coverage, while “cloud-ui” has only 56%. It’s worth looking into that component and checking which functionality is currently untested, and thus which tests are missing.

SeaLights shows you quality risks. Quality risks are recent code changes that have not been tested. For each microservice, and each file within the microservice, SeaLights can show the number of quality risks. SeaLights lets you drill down into the code itself to see who changed the code and which specific functions, branches, or lines of code are untested.
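Conceptually, a quality risk is the intersection of two data sets: lines changed in recent builds and lines that no test executed. The sketch below shows only that set arithmetic; it is not SeaLights' implementation. It assumes changed line numbers per file (for example, parsed from `git diff` output), covered line numbers per file from any coverage tool, and a repository layout with one top-level directory per service. All paths and numbers are made up.

```python
def quality_risks(changed: dict[str, set[int]], covered: dict[str, set[int]]) -> dict[str, set[int]]:
    """Map each file to its changed lines that no test executed."""
    risks = {}
    for path, lines in changed.items():
        untested = lines - covered.get(path, set())
        if untested:
            risks[path] = untested
    return risks


def risks_per_service(risks: dict[str, set[int]]) -> dict[str, int]:
    """Count untested changed lines per service, assuming one top-level directory per service."""
    counts: dict[str, int] = {}
    for path, lines in risks.items():
        service = path.split("/", 1)[0]
        counts[service] = counts.get(service, 0) + len(lines)
    return counts


# Made-up data: changed lines from a diff, and lines executed by any test.
CHANGED = {"cloud-ui/src/cart.ts": {10, 11, 12}, "api-gateway/src/Router.java": {5, 6}}
COVERED = {"cloud-ui/src/cart.ts": {10}, "api-gateway/src/Router.java": {5, 6, 7}}
```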

SeaLights creates a quality gate for each component. This is a series of criteria which define when the component can be promoted in the development pipeline, and released to production. The quality gate defines conditions that break the build if individual components or interactions between components have quality risks (untested changes or failed tests).
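As an illustration of what such criteria can look like, here is a minimal quality gate sketch. The `BuildQuality` and `GateCriteria` records and their default thresholds are hypothetical, not SeaLights configuration; they simply encode the two conditions named above (failed tests and untested changes) plus a coverage floor.

```python
from dataclasses import dataclass


@dataclass
class BuildQuality:
    coverage: float        # holistic coverage of the build, from 0.0 to 1.0
    failed_tests: int
    untested_changes: int  # quality risks: recent changes that no test executed


@dataclass
class GateCriteria:
    # Hypothetical default thresholds; each team would tune these per component.
    min_coverage: float = 0.70
    max_failed_tests: int = 0
    max_untested_changes: int = 0


def evaluate_gate(build: BuildQuality, criteria: GateCriteria) -> list[str]:
    """Return the reasons a build fails the gate; an empty list means it may be promoted."""
    reasons = []
    if build.failed_tests > criteria.max_failed_tests:
        reasons.append(f"{build.failed_tests} failed test(s)")
    if build.untested_changes > criteria.max_untested_changes:
        reasons.append(f"{build.untested_changes} untested change(s)")
    if build.coverage < criteria.min_coverage:
        reasons.append(f"coverage {build.coverage:.0%} is below {criteria.min_coverage:.0%}")
    return reasons
```

In a CI pipeline, a step could call `evaluate_gate` and exit with a non-zero status whenever the returned list is not empty, which is what breaking the build usually means in practice.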

Quality gates provide an unprecedented level of control over microservices as they are deployed to production, preventing the small production “fires” that can add up to a big fire in your microservices app.

Create an Integration Build to Manage Broad Cross-Application Tests

Beyond individual components, SeaLights lets you define an integration build. This is a larger structure that includes multiple microservices, multiple components, or the entire microservices application.

Just like SeaLights checks for quality risks at the individual component level, it does so at the integration build level. It answers questions like:

- Do integration points cover all new changes in the latest build?

- What happened to test coverage compared to previous builds?

SeaLights shows test coverage for the entire integration build. This indicates which broad application changes are not covered by your end-to-end tests. For example, the integration build shown below has overall test coverage of 75%, along with the coverage of individual test stages, such as API tests, sanity integrations, and Selenium tests.
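The overall figure for an integration build cannot simply be the sum of its per-stage figures, because stages overlap. Below is a minimal sketch, assuming method-level coverage data for each test stage; the stage names and data are made up. The per-stage figures here add up to 125%, while the union of what the stages executed gives 75%.

```python
# Made-up data: eight methods in the integration build, and the methods
# each test stage executed during its latest run.
ALL_METHODS = {f"m{i}" for i in range(1, 9)}
STAGE_COVERAGE = {
    "api-tests": {"m1", "m2", "m3", "m4"},
    "sanity-integrations": {"m3", "m4", "m5"},
    "selenium": {"m4", "m5", "m6"},
}


def stage_coverage(stages: dict[str, set[str]], all_methods: set[str]) -> dict[str, float]:
    """Coverage achieved by each stage on its own."""
    return {
        name: len(methods & all_methods) / len(all_methods) if all_methods else 0.0
        for name, methods in stages.items()
    }


def holistic_coverage(stages: dict[str, set[str]], all_methods: set[str]) -> float:
    """Fraction of methods executed by at least one stage: a union, not a sum."""
    covered = set().union(*stages.values()) & all_methods
    return len(covered) / len(all_methods) if all_methods else 0.0
```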

SeaLights lets you drill down into each of the components, to get a breakdown of coverage and risks for each component.

SeaLights lets you compare each build to previous builds, and most importantly the last production build (indicated by a flag). You can drill down to each build to see which components changed, which quality risks were created in the build, and whether they are adequately covered by tests.
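Comparing a candidate build against the last production build starts with knowing which components differ. Here is a minimal sketch, assuming each integration build can be described by a manifest that maps component names to versions (for instance, container image tags); the manifest format and data are hypothetical. The changed components are the ones whose quality risks deserve attention first.

```python
from typing import Optional

# Hypothetical build manifests: component name -> deployed version.
PRODUCTION = {"api-gateway": "1.4.0", "cloud-ui": "2.1.3", "billing": "0.9.1"}
CANDIDATE = {"api-gateway": "1.4.0", "cloud-ui": "2.2.0", "search": "0.1.0"}


def changed_components(
    previous: dict[str, str], current: dict[str, str]
) -> dict[str, tuple[Optional[str], Optional[str]]]:
    """Map each component whose version differs to (old, new); None marks added or removed."""
    changes = {}
    for name in sorted(previous.keys() | current.keys()):
        before, after = previous.get(name), current.get(name)
        if before != after:
            changes[name] = (before, after)
    return changes
```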

SeaLights creates an automatic quality gate for the integration build as it does for individual components. The quality gate defines conditions that break the build if individual components, or interactions between components, have quality risks (untested changes, or failed tests).

SeaLights provides a wealth of data, which helps you quickly decide if a build is ready for release or not. This data is ordinarily not available to development teams at all, especially in a microservices environment.

Understand Tests Along Three Dimensions: Coverage, Change, Usage

SeaLights provides the data you need to understand test efficiency—how your tests actually impact quality.

When you add tests, how do you know they are actually needed? SeaLights gathers data across three dimensions to help you understand the most critical gaps in your test coverage, and where you should focus your tests.

Dimension 1: Test Coverage

Which tests exist for each individual microservice, and for the integration build as a whole? Some parts of the application might be extensively tested while others are not tested at all.

Dimension 2: Code Changes

With so many updates entering production, it’s critical to know what your tests are covering. SeaLights lets you look at the latest build, the last few builds, or even all changes made in the past 6 or 12 months, and see which of those changes are not covered by tests. If a change was never tested, or wasn’t tested recently, you don’t know what trouble it might cause in production.

Dimension 3: Real Usage

How are components and features used in production? In the table below, the “Used” column shows how many methods are actually executed in production by the application’s users. Methods that have been modified, and are also actively used, represent the largest quality risk.
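Combining the three dimensions can be as simple as set arithmetic plus a sort. The sketch below assumes method-level data: the methods modified in recent builds, the methods covered by any test, and per-method call counts observed in production (for example, from a sampling agent). All names and numbers are made up. Untested modified methods are ranked by production usage, so the heavily used ones surface first.

```python
def prioritized_risks(
    modified: set[str], covered: set[str], prod_calls: dict[str, int]
) -> list[tuple[str, int]]:
    """Untested modified methods with their production call counts, most used first."""
    untested = modified - covered
    ranked = [(method, prod_calls.get(method, 0)) for method in untested]
    # Most-used first; ties broken by name so the order is deterministic.
    return sorted(ranked, key=lambda item: (-item[1], item[0]))


# Made-up data for one component.
MODIFIED = {"Cart.total", "Cart.applyCoupon", "Admin.exportCsv", "Auth.login"}
COVERED = {"Auth.login"}
PROD_CALLS = {"Cart.total": 120_000, "Cart.applyCoupon": 800, "Auth.login": 50_000}
```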

Defining Which Tests Should be Included in a Partial Regression Run

SeaLights provides a Test Impact Analytics report, showing which tests are actually impacted by a given change in a build. Test Impact Analytics lets you identify which tests are needed to cover changes in the latest build and run only those tests, instead of the entire regression suite.

You may still need to run a full regression suite, but not necessarily for every build. You can run a partial regression suite for each build based on the SeaLights test impact list. Then, in the last testing cycle of the sprint, run a full regression suite. That will save a lot of time.

Once you have run only the tests impacted by the changes, you should also check for quality risks, making sure that all code changes are covered by existing tests. If quality risks remain, you need to add tests to cover them. Run those tests, and in the next cycle SeaLights will already consider them in the test impact list.
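The workflow described in this section can be sketched in a few lines, assuming a per-test coverage map recorded during an earlier run and the set of methods changed in the current build. This is a simplified illustration of test impact analysis, not the Test Impact Analytics implementation; the names and data are hypothetical. A method that no test has ever executed selects no tests at all, which is exactly why the remaining quality risks have to be checked separately.

```python
# Hypothetical coverage map recorded during the previous run:
# test name -> methods that test executed.
TEST_COVERAGE = {
    "test_login": {"Auth.login", "Session.create"},
    "test_checkout": {"Cart.total", "Pay.charge"},
    "test_search": {"Search.query"},
}


def select_tests(
    test_coverage: dict[str, set[str]], changed: set[str], final_cycle_of_sprint: bool = False
) -> list[str]:
    """Impacted tests for a regular build; the full suite in the sprint's last cycle."""
    if final_cycle_of_sprint:
        return sorted(test_coverage)
    return sorted(name for name, methods in test_coverage.items() if methods & changed)


def uncovered_changes(test_coverage: dict[str, set[str]], changed: set[str]) -> set[str]:
    """Changed methods that no known test executes: quality risks that need new tests."""
    covered = set().union(*test_coverage.values())
    return changed - covered
```

If a build changes `Pay.charge` and adds `Pay.refund`, only `test_checkout` is selected, and `Pay.refund` is reported as an uncovered change that needs a new test.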

Tracking Improvement Across Teams

The last part of the puzzle is tracking progress. SeaLights gives you a comprehensive picture of your quality risks and test coverage, but that’s not enough—you need to improve over time, by adding tests to cover more unchecked code changes. Every new test that addresses a code change reduces your quality risk.

Across teams, SeaLights makes it clear who is pulling their weight in maintenance work and who is not. For example, the graph below shows that for a particular microservice, holistic test coverage across all test types was 64% in December, but down to only 31% in January.

What happened here? Very likely, the team introduced many new code changes but did not build enough tests to cover those changes. This is a clear indication that this team is falling behind in quality and creating technical debt.
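Spotting a drop like this one can be automated. Here is a minimal sketch, assuming one holistic coverage figure per component per period, stored as fractions; the 10-percentage-point threshold and the data are hypothetical.

```python
def coverage_drops(history: dict[str, list[float]], max_drop: float = 0.10) -> dict[str, float]:
    """Components whose coverage fell by more than `max_drop` since the previous period."""
    drops = {}
    for component, series in history.items():
        if len(series) >= 2:
            drop = series[-2] - series[-1]
            if drop > max_drop:
                drops[component] = round(drop, 4)
    return drops


# Made-up monthly coverage figures (fractions, oldest first).
HISTORY = {"cloud-ui": [0.64, 0.31], "api-gateway": [0.90, 0.92], "billing": [0.80]}
```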

SeaLights shows, for each component or team:

- A quality trend—are test coverage and quality risks improving or getting worse over time?

- Build quality—how many of the component’s builds are failing or experiencing serious warnings?

- Problem areas—you can see which type of tests is the source of the problem. For example, Selenium tests have not been updated, or the team is not creating unit tests.

Microservices Let You Move Fast; Tests Should Follow

Too often, microservices applications face an exponential increase in tests, which do not manage to stop fires in production. With the complexity of the microservices environment, and fast pace of development, it is difficult to test comprehensively.

However, we have shown that with the help of a Quality Intelligence Platform like SeaLights, you can regain control of your microservices app, catch quality risks and mitigate them with a focused maintenance effort.

SeaLights helps you make tests agile—fast moving, effective and quick to adapt to new requirements.
