Writing tests is a necessity. A necessity that many would prefer to live without.

I think many developers perceive writing tests as wasteful, time-consuming, redundant, and boring. Even though most of them understand why these tests matter, they hope the task will not land on their plate.

Unable to determine exactly what to test or how to test it, developers end up either testing randomly or testing excessively, and both approaches lead to the negative feelings described above.

For QA engineers, writing tests is a core job requirement that takes up a large portion of their time, yet they face similar conflicts. On the one hand, they don’t want to release unstable software to production; on the other, they don’t want to be seen as responsible for delaying the release. This leads to growing frustration, as the pressure to increase release velocity collides with the inability to get an accurate reading of each test’s value or ROI.

Now, what if I told you that the days of writing unneeded tests are over? That there is a solution that can guide you to write tests only in the areas that need them most?

Well, the solution exists within quality intelligence technology, and we refer to it as test gap analytics.

First, let’s define a Test-Gap: a code area that was not tested, either in a certain build or during a certain time period. For now, let’s treat a single method as the atomic unit of such a code area. It is important to distinguish between four types of Test-Gaps:

- Type 1: An untested method that was recently modified and is in use in production

- Type 2: An untested method that was recently modified and is not used in production

- Type 3: An untested method that hasn’t been modified for months and is in use in production

- Type 4: An untested method that hasn’t been modified for months and is not used in production

I think you will all agree that this list is sorted by severity: addressing a type 1 Test-Gap is far more important than addressing a type 3 or type 4 one. I would even say that type 4 Test-Gaps are not worth your time, and that type 3 Test-Gaps matter only if your goal is to build solid regression tests covering all the main user flows (since end users have, in effect, already tested that code). A type 4 Test-Gap might also indicate dead code in the system, in which case some cleanup may be required.
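To make the classification concrete, here is a minimal Python sketch of the severity ordering described above. It assumes each method has already been tagged with three yes/no facts (tested, recently modified, used in production); how "recently" is defined is left open, and every name here (`GapType`, `MethodInfo`, `classify`, `plan`) is a hypothetical illustration, not SeaLights’ actual implementation. Type 4 gaps are kept out of the work list and reported separately as possible dead code.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import List, Optional, Tuple


class GapType(IntEnum):
    """The four Test-Gap types; a lower value means a more severe gap."""
    MODIFIED_IN_PRODUCTION = 1
    MODIFIED_NOT_IN_PRODUCTION = 2
    STABLE_IN_PRODUCTION = 3
    STABLE_NOT_IN_PRODUCTION = 4


@dataclass(frozen=True)
class MethodInfo:
    name: str
    tested: bool              # covered by at least one test in the build or period
    recently_modified: bool   # changed within the analysis window (assumed given)
    used_in_production: bool  # executed by real users in production


def classify(method: MethodInfo) -> Optional[GapType]:
    """Return the Test-Gap type of a method, or None if it is tested."""
    if method.tested:
        return None
    if method.recently_modified:
        return (GapType.MODIFIED_IN_PRODUCTION if method.used_in_production
                else GapType.MODIFIED_NOT_IN_PRODUCTION)
    return (GapType.STABLE_IN_PRODUCTION if method.used_in_production
            else GapType.STABLE_NOT_IN_PRODUCTION)


def plan(methods: List[MethodInfo]) -> Tuple[List[Tuple[GapType, str]], List[str]]:
    """Return (work list sorted by severity, dead-code candidates)."""
    work: List[Tuple[GapType, str]] = []
    dead_code: List[str] = []
    for m in methods:
        gap = classify(m)
        if gap is None:
            continue  # tested: not a gap
        if gap is GapType.STABLE_NOT_IN_PRODUCTION:
            dead_code.append(m.name)  # type 4: skip testing, review for removal
        else:
            work.append((gap, m.name))
    work.sort()  # tuples sort by GapType value first, i.e. most severe first
    return work, dead_code


methods = [
    MethodInfo("checkout", tested=False, recently_modified=True, used_in_production=True),
    MethodInfo("legacy_export", tested=False, recently_modified=False, used_in_production=False),
    MethodInfo("login", tested=True, recently_modified=True, used_in_production=True),
    MethodInfo("report_view", tested=False, recently_modified=False, used_in_production=True),
]
work, dead = plan(methods)
print([(int(g), n) for g, n in work])  # [(1, 'checkout'), (3, 'report_view')]
print(dead)                            # ['legacy_export']
```

Using an `IntEnum` lets the type number double as the sort key, so "most severe first" falls out of an ordinary sort.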

With that approach in mind, SeaLights analyzes multiple data sources to locate and classify Test-Gaps:

- Builds: what changed from one build to the next, who changed it, and when

- Test coverage: what each test type or stage covered in the application under test

- Production: which parts of the deployed application your real users actually “touch”

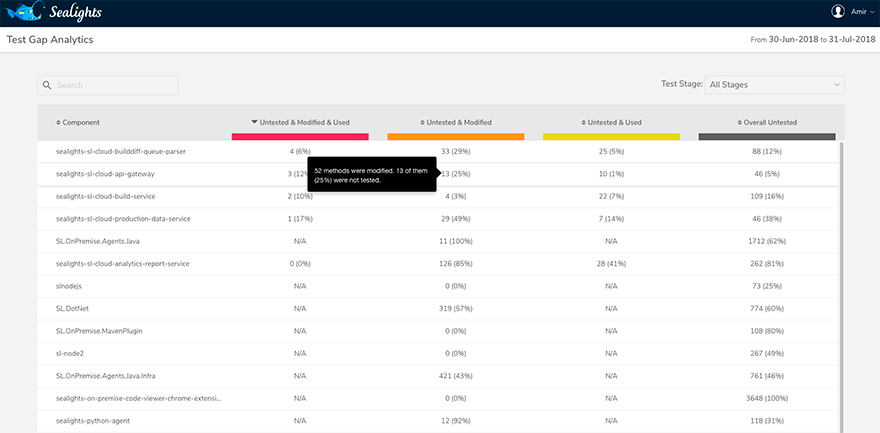
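As a rough illustration of how these data sources fit together, the sketch below derives the untested methods of a build, plus the two flags the four types depend on, using plain set operations. The input shapes are assumptions made for the example: each build is a hypothetical mapping from method name to a fingerprint of its body (for instance, a hash), coverage is a set of method names per test stage, and production usage is the set of methods real users executed. "Recently modified" is simplified to "changed since the previous build", and the "who" and "when" of each change are not modeled.

```python
from typing import Dict, Set

# Hypothetical shape: method name -> fingerprint of its body (e.g. a hash).
Build = Dict[str, str]


def changed_methods(previous: Build, current: Build) -> Set[str]:
    """Methods that are new in `current` or whose fingerprint changed."""
    return {name for name, fp in current.items() if previous.get(name) != fp}


def find_test_gaps(
    current: Build,
    coverage_by_stage: Dict[str, Set[str]],
    modified: Set[str],
    production_hits: Set[str],
) -> Dict[str, Dict[str, bool]]:
    """Map each untested method in `current` to the two flags the gap types use."""
    covered: Set[str] = set().union(*coverage_by_stage.values())  # any stage counts
    gaps = set(current) - covered
    return {
        name: {
            "recently_modified": name in modified,
            "used_in_production": name in production_hits,
        }
        for name in sorted(gaps)
    }


prev_build = {"Cart.add": "a1", "Cart.remove": "b2", "Report.export": "c3"}
curr_build = {"Cart.add": "a9", "Cart.remove": "b2", "Report.export": "c3", "Cart.clear": "d4"}
coverage = {"unit": {"Cart.remove"}, "integration": {"Cart.clear"}}
production = {"Cart.add", "Cart.remove"}

modified = changed_methods(prev_build, curr_build)
gaps = find_test_gaps(curr_build, coverage, modified, production)
print(sorted(modified))  # ['Cart.add', 'Cart.clear']
# Cart.add is modified and used in production (type 1);
# Report.export is neither (type 4).
print(gaps)
```

A method covered by any test stage is treated as tested here; a per-stage view (for example, "untested by integration tests") would simply skip the union and check one stage’s set.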

Here is an example of Test Gap Analytics as it is used by our customers:

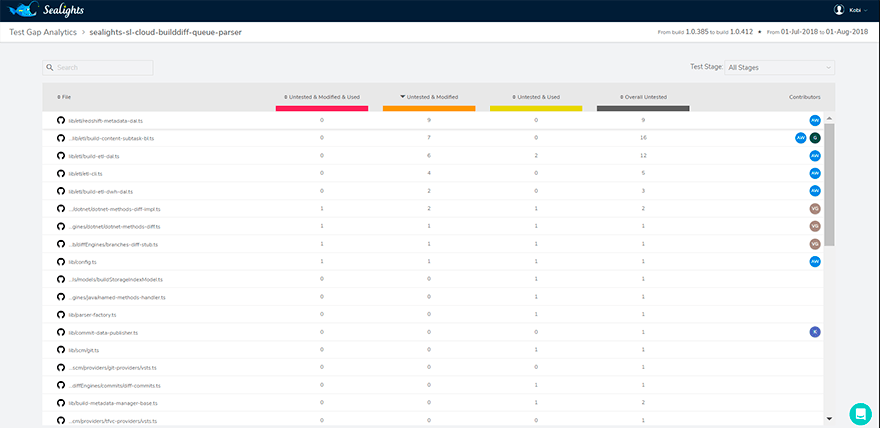

To get more information on a specific component, the user clicks it to see a list of the files that contain its Test-Gaps, as well as the developers who committed the new code:
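At its core, this drill-down is a group-by. The hypothetical sketch below groups Test-Gap records by component and collects the affected files and committing developers for each; the `Gap` record, its fields, and `drill_down` are assumptions for illustration, not the product’s data model.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Set


@dataclass(frozen=True)
class Gap:
    component: str  # hypothetical: the module or service the method belongs to
    file: str       # source file that contains the untested method
    method: str
    committer: str  # developer who committed the latest change to the method


def drill_down(gaps: List[Gap]) -> Dict[str, Dict[str, Set[str]]]:
    """Group Test-Gaps by component, collecting the files and committers of each."""
    view: Dict[str, Dict[str, Set[str]]] = defaultdict(
        lambda: {"files": set(), "committers": set()}
    )
    for gap in gaps:
        entry = view[gap.component]
        entry["files"].add(gap.file)
        entry["committers"].add(gap.committer)
    return dict(view)


gaps = [
    Gap("payments", "payments/charge.py", "charge", "dana"),
    Gap("payments", "payments/refund.py", "refund", "lee"),
    Gap("payments", "payments/charge.py", "retry", "dana"),
    Gap("search", "search/index.py", "reindex", "sam"),
]
view = drill_down(gaps)
print(sorted(view["payments"]["files"]))       # ['payments/charge.py', 'payments/refund.py']
print(sorted(view["payments"]["committers"]))  # ['dana', 'lee']
```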

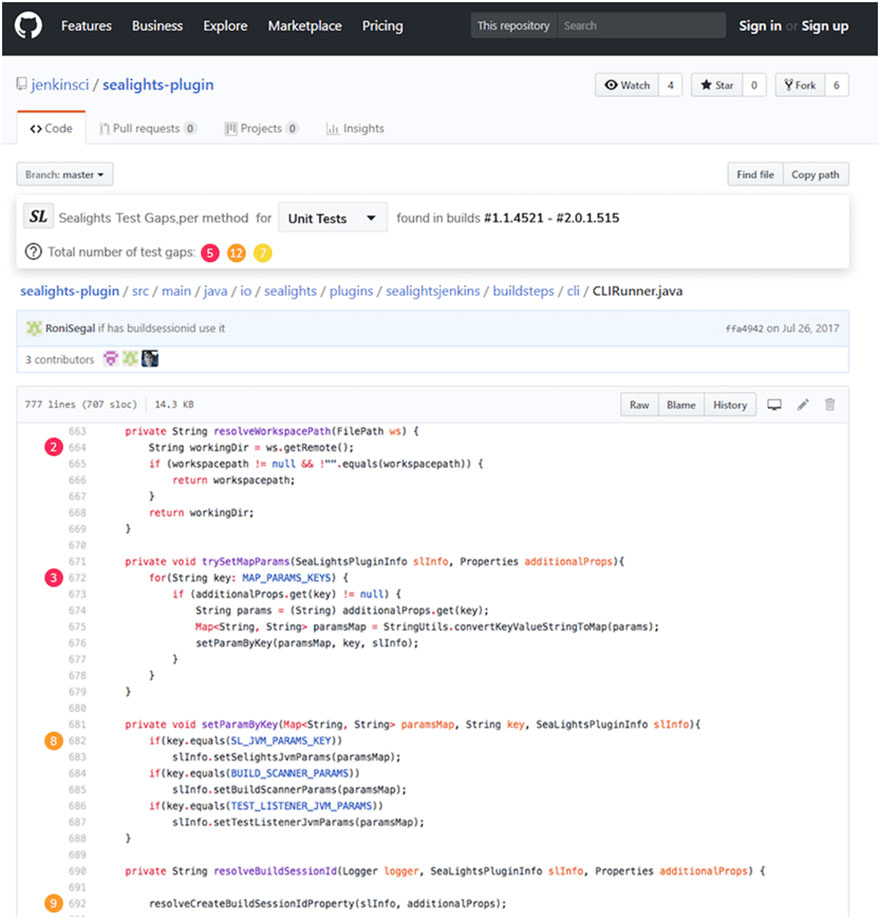

For easier access, developers and QA engineers can also see this information directly in their source control, for each file they are interested in.

With the insights these views provide, developers and QA engineers can plan their work based on data gathered from multiple sources across the software development pipeline, make the right decisions about where to develop their next tests, and put to rest the days of writing unneeded tests.