As a development manager, you understand the importance of testing in ensuring the quality and reliability of your software. You are probably also aware of the challenges that come with troubleshooting failed tests. Your developers have to identify the root cause of the issue, fix it, and make sure it doesn’t happen again. This is especially frustrating for your team when the failure has nothing to do with their code changes and is instead caused by a flaky test, a dependency between tests, a configuration issue, or an external factor such as an API or a third-party library.

This is a significant problem. By some estimates, development teams spend 20-30% of their time, on average, troubleshooting failed tests, which takes valuable time and resources away from driving innovation and progress. Even more concerning, over 70% of test failures occur for reasons unrelated to code changes, so a large share of this troubleshooting time goes to issues that could potentially be avoided.

But what if there were a way to reduce the time you spend troubleshooting failed tests that are unrelated to your code changes? That’s where Test Impact Analytics comes in.

Test Impact Analytics is a tool that analyzes your code changes and identifies which tests are related to those changes.

This means that you can focus on troubleshooting only the tests that are relevant to your code changes, rather than wasting time on unrelated failed tests. In fact, focusing on relevant tests can reduce the overall time spent troubleshooting failed tests by up to 60%.

Test Impact Analytics eliminates irrelevant tests from execution, reducing the time needed to troubleshoot irrelevant test failures.

The benefits of Test Impact Analytics don’t stop there. By focusing only on tests related to code changes, you’ll also have a higher success rate in fixing issues, with between 80% and 100% of failed tests related to code changes being successfully resolved. And because you’re only troubleshooting the tests that are related to your code changes, you can concentrate on the tasks that matter most and have the greatest impact, rather than getting bogged down in unrelated failures.

Troubleshooting failed tests that are unrelated to your code changes is not only time-consuming and frustrating; it can also erode focus and productivity. When you’re constantly switching contexts between tasks, it is difficult to stay focused and maintain a high level of productivity.

The cost of context switching can be significant: a developer can lose up to 15 minutes of productivity with each switch. This adds up quickly, especially for teams that are constantly switching between tasks or troubleshooting failed tests that are unrelated to their code changes. Beyond the time lost, frequent context switching fragments attention and lowers overall productivity. By using Test Impact Analytics to focus only on the tests related to code changes, teams can minimize context switching and improve their overall productivity and efficiency.

By analyzing your code changes and identifying which tests they affect, Test Impact Analytics helps you focus on the tasks that are most important and have the greatest impact. You spend less time troubleshooting failed tests that are unrelated to your code changes, and more time on the work that drives innovation and progress.

With Test Impact Analytics, troubleshooting failed tests becomes much more focused, efficient, and productive for developers.

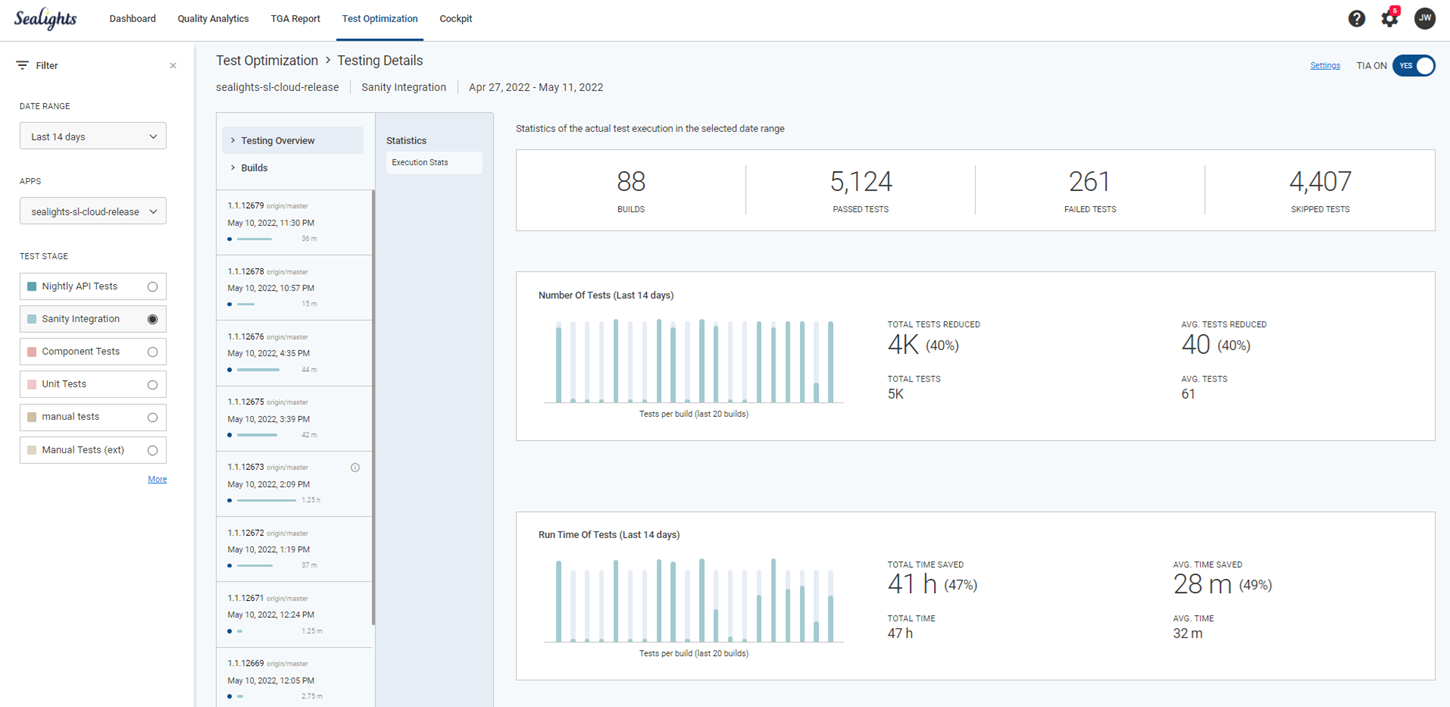

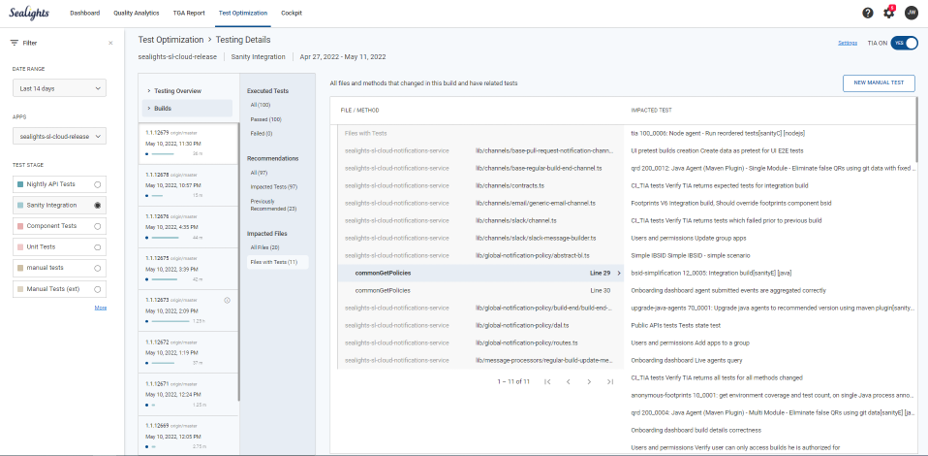

So how does Test Impact Analytics work? It analyzes your historical builds and maps each test to the methods it covers. It then detects the code changes in the latest build and identifies which tests those changes impact. The list of impacted tests is available in the dashboard and through an API, and it can be fed back into the test framework so that only the impacted tests are executed. Alternatively, the list can be fed into your CI pipeline. Running only the tests related to code changes saves time and improves efficiency.
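The core matching idea can be illustrated with a minimal Python sketch. It is not the product’s implementation or API: the coverage-record format, the method IDs, and the functions `build_coverage_map`, `impacted_tests`, and `pytest_command` are hypothetical, and the sketch assumes per-test method coverage has already been collected from earlier builds. It merges that history into a test-to-methods map, selects the tests whose covered methods overlap the changed methods, and turns the result into a pytest invocation as one example of feeding the list back into a test framework.

```python
from typing import Dict, Iterable, List, Optional, Set

# Hypothetical data model: test ID -> IDs of the methods that test executed.
CoverageMap = Dict[str, Set[str]]


def build_coverage_map(historical_runs: Iterable[Dict[str, Iterable[str]]]) -> CoverageMap:
    """Merge per-build coverage records into a single test -> methods map."""
    coverage: CoverageMap = {}
    for run in historical_runs:
        for test_id, methods in run.items():
            coverage.setdefault(test_id, set()).update(methods)
    return coverage


def impacted_tests(
    coverage: CoverageMap,
    changed_methods: Iterable[str],
    all_tests: Iterable[str] = (),
) -> List[str]:
    """Select tests that cover at least one changed method.

    Tests listed in all_tests but missing from the coverage history are
    included as well, since there is no evidence they are safe to skip.
    """
    changed = set(changed_methods)
    # A test is impacted if its covered methods intersect the changed methods.
    selected = {test for test, methods in coverage.items() if methods & changed}
    # Conservative fallback: tests with no coverage history always run.
    selected.update(test for test in all_tests if test not in coverage)
    return sorted(selected)


def pytest_command(tests: List[str]) -> Optional[List[str]]:
    """Build a pytest invocation limited to the selected test node IDs.

    Returns None when nothing is impacted, because running bare `pytest`
    would collect the whole suite instead of nothing.
    """
    if not tests:
        return None
    return ["pytest", *tests]


if __name__ == "__main__":
    # Two hypothetical historical builds with per-test method coverage.
    runs = [
        {
            "tests/test_cart.py::test_total": {"cart.Cart.total", "cart.Cart.add"},
            "tests/test_user.py::test_login": {"auth.login"},
        },
        {"tests/test_cart.py::test_total": {"cart.Cart.apply_discount"}},
    ]
    coverage = build_coverage_map(runs)
    selected = impacted_tests(coverage, {"cart.Cart.apply_discount"})
    print(selected)  # ['tests/test_cart.py::test_total']
    print(pytest_command(selected))  # ['pytest', 'tests/test_cart.py::test_total']
```

Using set intersection keeps selection cheap even for large suites. Treating tests without coverage history as impacted is a deliberately conservative choice: skipping a test that was never mapped could hide a real regression.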

Overall, Test Impact Analytics is a powerful tool that can help teams improve productivity and efficiency by minimizing the time spent troubleshooting failed tests that are unrelated to code changes. By focusing on the tasks that are most important and have the greatest impact, teams can drive innovation and progress and deliver high-quality software more efficiently.