What Is the Definition of Done?

The Definition of Done (DoD) in agile methodology is a list of criteria that must be met for a user story, sprint, or release to be considered “done.” Programmers are known for saying they are “done” when in fact they have only completed the coding; a working product requires additional stages, such as testing, deployment, and documentation. The Definition of Done should cover these additional stages.

Agile management emphasizes that every increment of work, or sprint, should produce a potentially shippable product: the result of the sprint should be working software. A build may or may not actually be released to customers; that decision rests with the product owner.

Ideally, the Definition of Done should capture all the essential things required for a work unit to be released for use by customers.
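One way to make this concrete is to treat the Definition of Done as an explicit checklist in which every item must pass. The sketch below is a minimal illustration rather than a prescribed implementation: the `WorkItem` class and its fields are hypothetical, and the criteria simply mirror the stages named above (coding, testing, deployment, documentation).

```python
from dataclasses import dataclass, field

# Hypothetical criteria, mirroring the stages named in the text.
DEFAULT_CRITERIA = ("coded", "tested", "deployed", "documented")


@dataclass
class WorkItem:
    """A user story, sprint, or release tracked against a Definition of Done."""
    name: str
    completed: set = field(default_factory=set)

    def missing(self, criteria=DEFAULT_CRITERIA):
        """Return the criteria not yet met, in checklist order."""
        return [c for c in criteria if c not in self.completed]

    def is_done(self, criteria=DEFAULT_CRITERIA):
        """Done only when every criterion on the checklist is met."""
        return not self.missing(criteria)


# A story whose code is written but nothing else has happened yet.
story = WorkItem("Password reset", completed={"coded"})
print(story.is_done())
print(story.missing())
```

Here the story is reported as not done, and `missing()` lists exactly the stages that still separate "coded" from "done."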

Definition of Done Examples

The following are Definition of Done templates typical of many development teams. A team may use these criteria, in part, in whole, or in combination with other criteria, as its Definition of Done.

| Definition of Done Checklist for a User Story | Definition of Done Checklist for a Sprint | Definition of Done Checklist for a Release |
|---|---|---|
| | | |

Dangers of a Weak Definition of Done: Risk and Delay

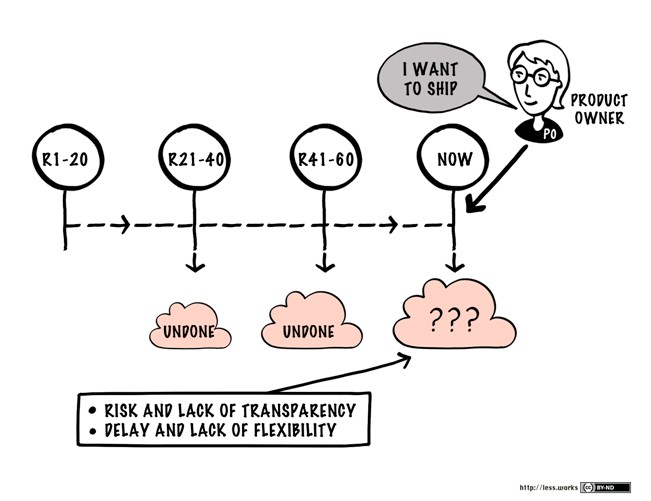

In agile projects the Definition of Done is fluid and left to the discretion of development teams. What happens when the Definition of Done is too “weak”? What happens when some criteria, which are important to achieve a potentially shippable product, are omitted and work is considered “done” even without them?

The most dangerous aspect of a weak Definition of Done is a false sense of progress. Teams report completing user stories, epics, and releases, but in fact the quality and completeness of those work units are not fully known. When a product owner decides to ship, they might suddenly discover that certain parts of the product are not working or not ready for production.

The bottom line is that a weak Definition of Done leads to risk, and risk can lead to delays, or worse – production faults and customer dissatisfaction from the shipped product.

Definition of Done – Finding the Sweet Spot

A weak Definition of Done is bad, but an overly strong Definition of Done is just as bad. If the team invests excessively in tests, verifications, and safeguards, it will not deliver on time and velocity will suffer.

The main “grey area” around the Definition of Done is quality. User stories are usually well defined and it’s clear what needs to be developed, but at what level does the product need to be tested to ensure customers are happy?

There are always more tests and test levels you can add, some of which are extremely complex and expensive. Where is the sweet spot—the place at which you can say “we’re done” and feel that you’ve hit the right level of quality that will make the vast majority of users happy?

Identifying Critical Missing Tests

To find that sweet spot, you first need to discover which critical tests are missing from your build. Which features are:

- New in this or recent builds and have no tests?

- Used very frequently in production?

- Missing an essential type of test (for example, UI automation for a UI feature)?

These are the first tests you should add to your sprint to ensure you are really “done.”
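The three questions above can be sketched as a simple check over feature metadata. This is only an illustration under stated assumptions: the `Feature` fields, the `HIGH_USAGE_THRESHOLD` value, and the choice to count heavy usage only when a testing gap also exists are all hypothetical, and real usage and coverage data would come from your own tooling.

```python
from dataclasses import dataclass

# Assumed cutoff for "used very frequently"; tune to your own traffic.
HIGH_USAGE_THRESHOLD = 1000  # production uses per day


@dataclass
class Feature:
    name: str
    is_new: bool                     # new in this or a recent build
    daily_uses: int                  # observed production usage
    test_types: frozenset            # test types that exist, e.g. {"unit"}
    required_test_types: frozenset   # test types considered essential


def critical_gaps(feature):
    """Return the reasons a feature needs tests added before it is 'done'."""
    missing = feature.required_test_types - feature.test_types
    reasons = []
    if feature.is_new and not feature.test_types:
        reasons.append("new with no tests")
    if missing:
        reasons.append("missing essential tests: " + ", ".join(sorted(missing)))
        # Assumption: heavy usage only matters when a testing gap exists.
        if feature.daily_uses >= HIGH_USAGE_THRESHOLD:
            reasons.append("heavily used in production")
    return reasons


features = [
    Feature(name="checkout", is_new=False, daily_uses=5000,
            test_types=frozenset({"unit"}),
            required_test_types=frozenset({"unit", "ui"})),
    Feature(name="export-csv", is_new=True, daily_uses=20,
            test_types=frozenset(),
            required_test_types=frozenset({"unit"})),
    Feature(name="login", is_new=False, daily_uses=9000,
            test_types=frozenset({"unit", "ui"}),
            required_test_types=frozenset({"unit", "ui"})),
]

# Print only the features that have at least one critical gap.
for f in features:
    gaps = critical_gaps(f)
    if gaps:
        print(f.name, gaps)
```

In this made-up data, `checkout` and `export-csv` are flagged, while `login` is heavily used but already has every required test type and so produces no gap.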

Identifying Unnecessary Tests – Not Really Required for “Done”

Conversely, which features are:

- Unchanged in recent builds and work well in production?

- Rarely or never used in production?

- Already comprehensively tested?

Features meeting one or more of these criteria do not need to be tested for your project to be considered “done,” because they do not represent a major risk of production faults which will affect users.

By combining these two aspects, identifying critical tests and adding them to the sprint plan, and removing unnecessary tests from the sprint plan, you can hit your sweet spot: a definition of exactly the work that needs to be done to ensure an adequate level of quality.
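Combining the two lists amounts to a triage step over the sprint's features. The following is a rough sketch, not a definitive method: the dictionary keys, both usage thresholds, and the rule that a critical gap takes precedence over a low-risk signal are assumptions made for illustration.

```python
# Assumed cutoffs; neither value comes from the article.
HIGH_USAGE_THRESHOLD = 1000  # daily uses counted as "very frequently used"
LOW_USAGE_THRESHOLD = 10     # daily uses counted as "rarely or never used"


def triage(feature):
    """Classify a feature as 'add tests', 'skip tests', or 'keep as planned'."""
    critical = (
        (feature["changed_recently"] and not feature["has_tests"])
        or (feature["daily_uses"] >= HIGH_USAGE_THRESHOLD
            and not feature["fully_tested"])
    )
    # Assumption: a critical gap wins over any low-risk signal.
    if critical:
        return "add tests"

    low_risk = (
        (not feature["changed_recently"] and feature["stable_in_production"])
        or feature["daily_uses"] <= LOW_USAGE_THRESHOLD
        or feature["fully_tested"]
    )
    return "skip tests" if low_risk else "keep as planned"


backlog = {
    "checkout": dict(changed_recently=True, has_tests=False, fully_tested=False,
                     stable_in_production=False, daily_uses=5000),
    "legacy-report": dict(changed_recently=False, has_tests=True, fully_tested=False,
                          stable_in_production=True, daily_uses=3),
    "search": dict(changed_recently=True, has_tests=True, fully_tested=False,
                   stable_in_production=True, daily_uses=400),
}

# Build the sprint's testing plan: one decision per feature.
plan = {name: triage(f) for name, f in backlog.items()}
print(plan)
```

With this sample backlog, `checkout` gets new tests, `legacy-report` drops out of the testing scope, and `search` stays as planned because it matches neither list.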

Using Quality Intelligence to Achieve a Perfect Definition of Done

Quality Intelligence software is a new category of tools that can help you fine-tune your quality efforts and reach a well-balanced Definition of Done. Quality platforms can:

- Collect data on quality – Identifying which features are covered by tests at all test levels (unit tests, UI tests, integration tests and end-to-end tests), and which features are most used in production.

- Identify quality risks – Aggregating data from all test frameworks and recommending where to add tests that will have the biggest impact on quality.

- Identify wasted tests – Flagging testing activity that is redundant and does not need to be included in the Definition of Done, because it covers features that haven’t recently changed or are not actively used.
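The data-collection step can be pictured as merging per-level test reports into a single view of which features are covered at which levels. The sketch below is a simplified illustration and does not describe how any particular product works; the report format, the function names, and the sample feature names are all hypothetical.

```python
from collections import defaultdict

# Test levels named in the text.
TEST_LEVELS = ("unit", "ui", "integration", "end-to-end")


def merge_coverage(reports):
    """Merge {level: [feature, ...]} reports into {feature: {levels}}."""
    coverage = defaultdict(set)
    for level, covered_features in reports.items():
        for feature in covered_features:
            coverage[feature].add(level)
    return dict(coverage)


def uncovered_levels(coverage, all_features):
    """For each feature, list the test levels with no coverage at all."""
    return {
        feature: [lvl for lvl in TEST_LEVELS
                  if lvl not in coverage.get(feature, set())]
        for feature in all_features
    }


# Hypothetical output from four separate test frameworks.
reports = {
    "unit": ["login", "checkout"],
    "ui": ["login"],
    "integration": ["checkout"],
    "end-to-end": [],
}

gaps = uncovered_levels(merge_coverage(reports), ["login", "checkout", "export"])
print(gaps)
```

A feature such as `export`, which appears in no report, shows up with every level uncovered, which is the kind of gap that could then be weighed against production usage.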

SeaLights is a leading Quality Intelligence platform for software development teams, providing actionable insight based on data analytics from across the development pipeline.