Quality Risk Intelligence.

Leverage the power of AI/ML to detect and resolve quality risks as soon as they are introduced to your pipeline.

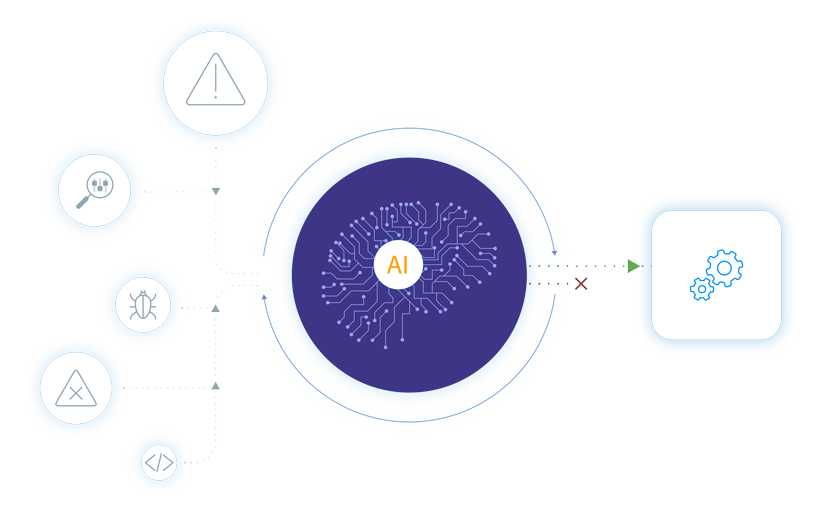

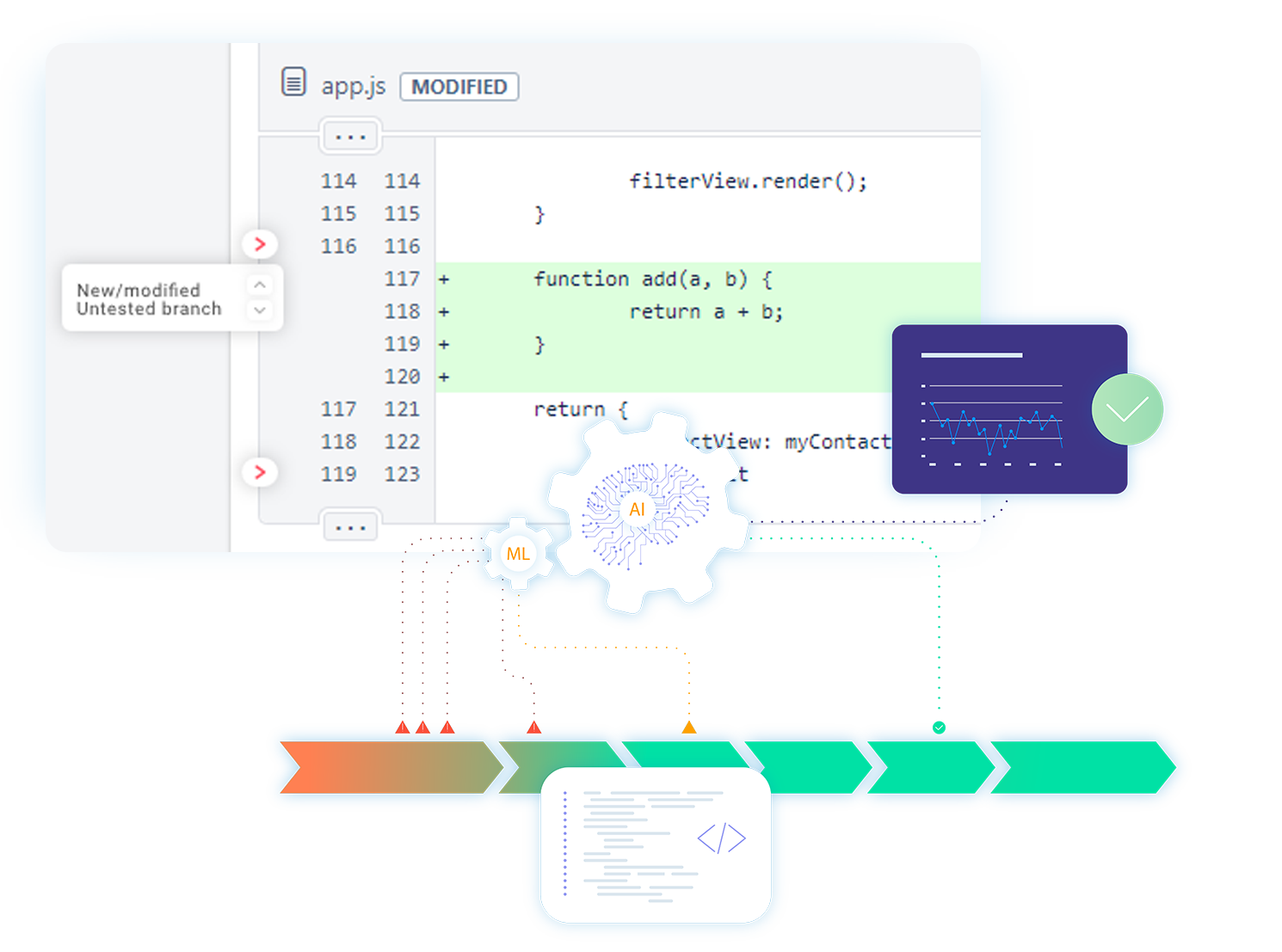

Analyzing quality risks in real time.

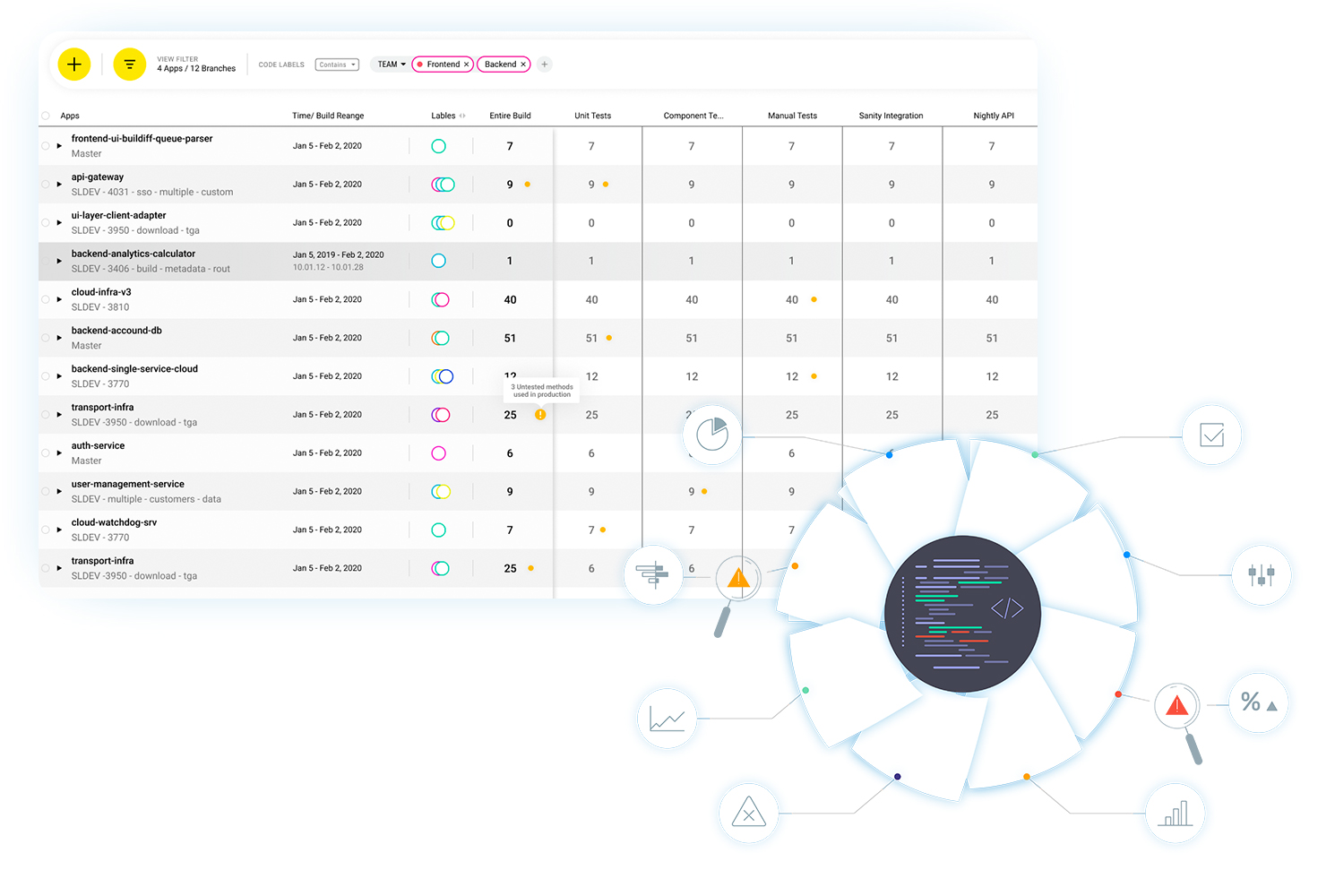

SeaLights Quality Intelligence ingests millions of data points and analyzes them in real time using AI/ML algorithms. It analyzes each build and identifies quality risks: critical components that affect users but are not sufficiently tested.

Teams can view these quality risks at all stages of the SDLC. For example, SeaLights provides a quality risk report within a pull request approval, letting the approver know the potential impact on the pipeline. This allows organizations to shift left and detect quality risks early, before they turn into defects in staging or production environments.

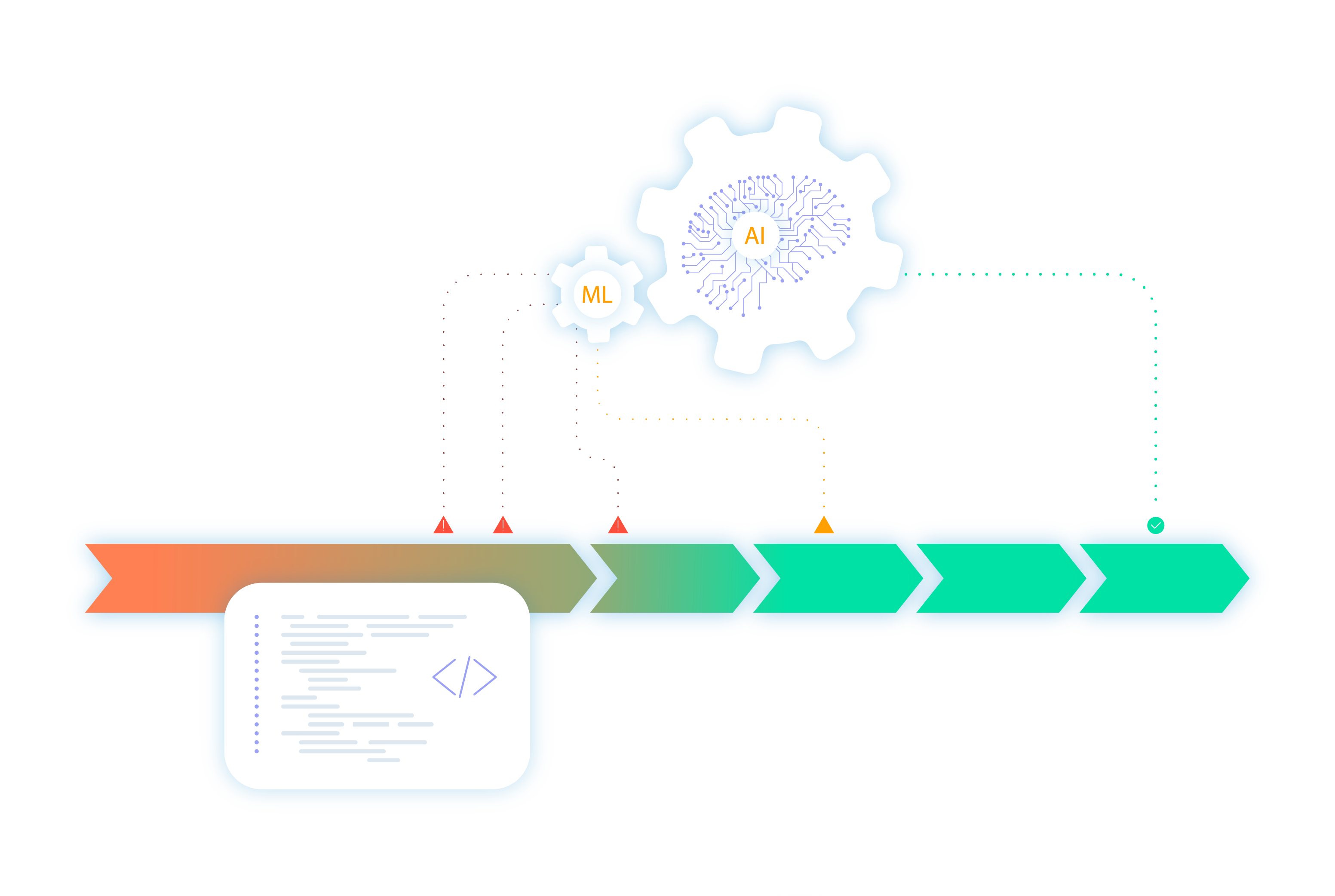

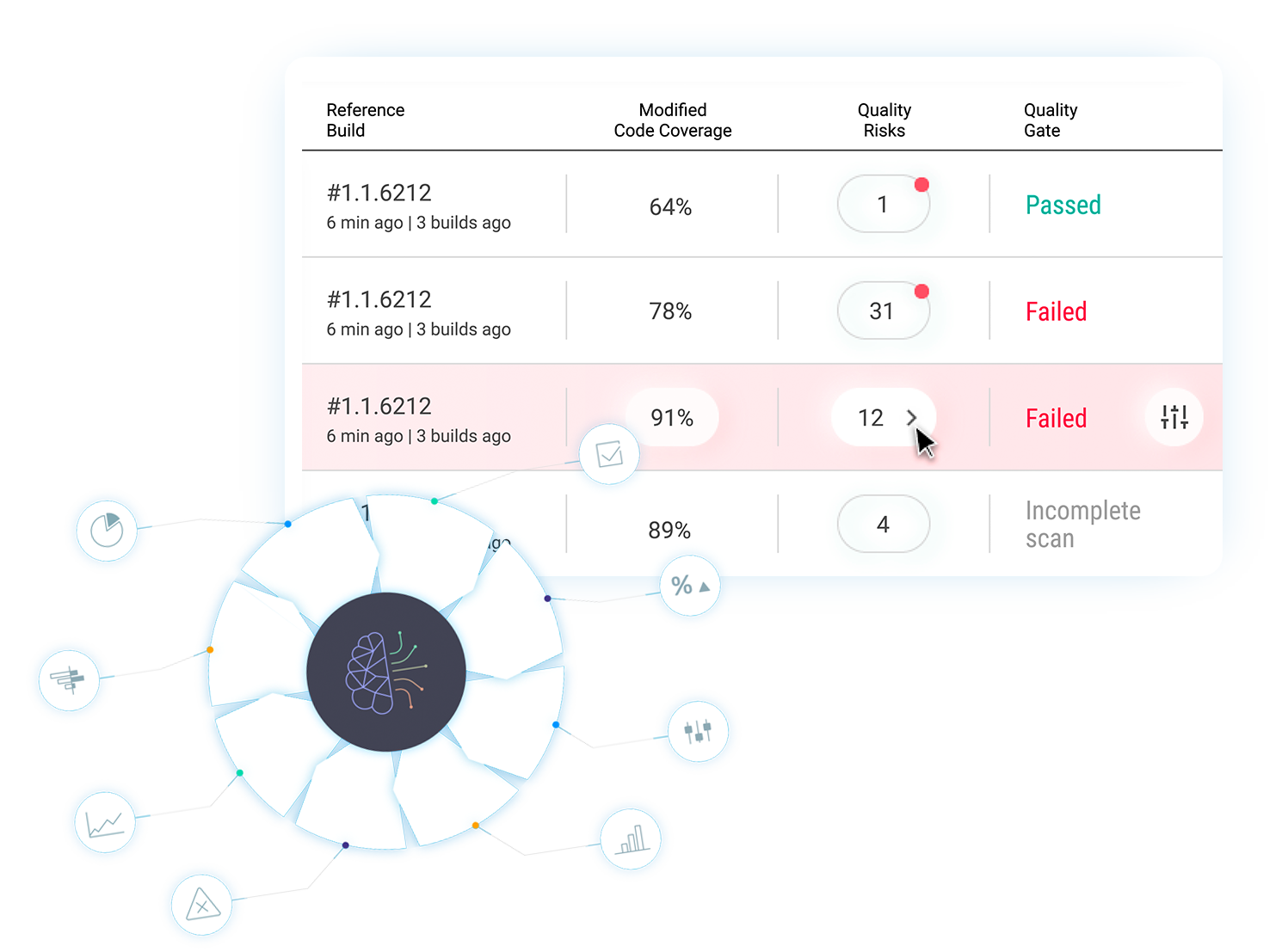

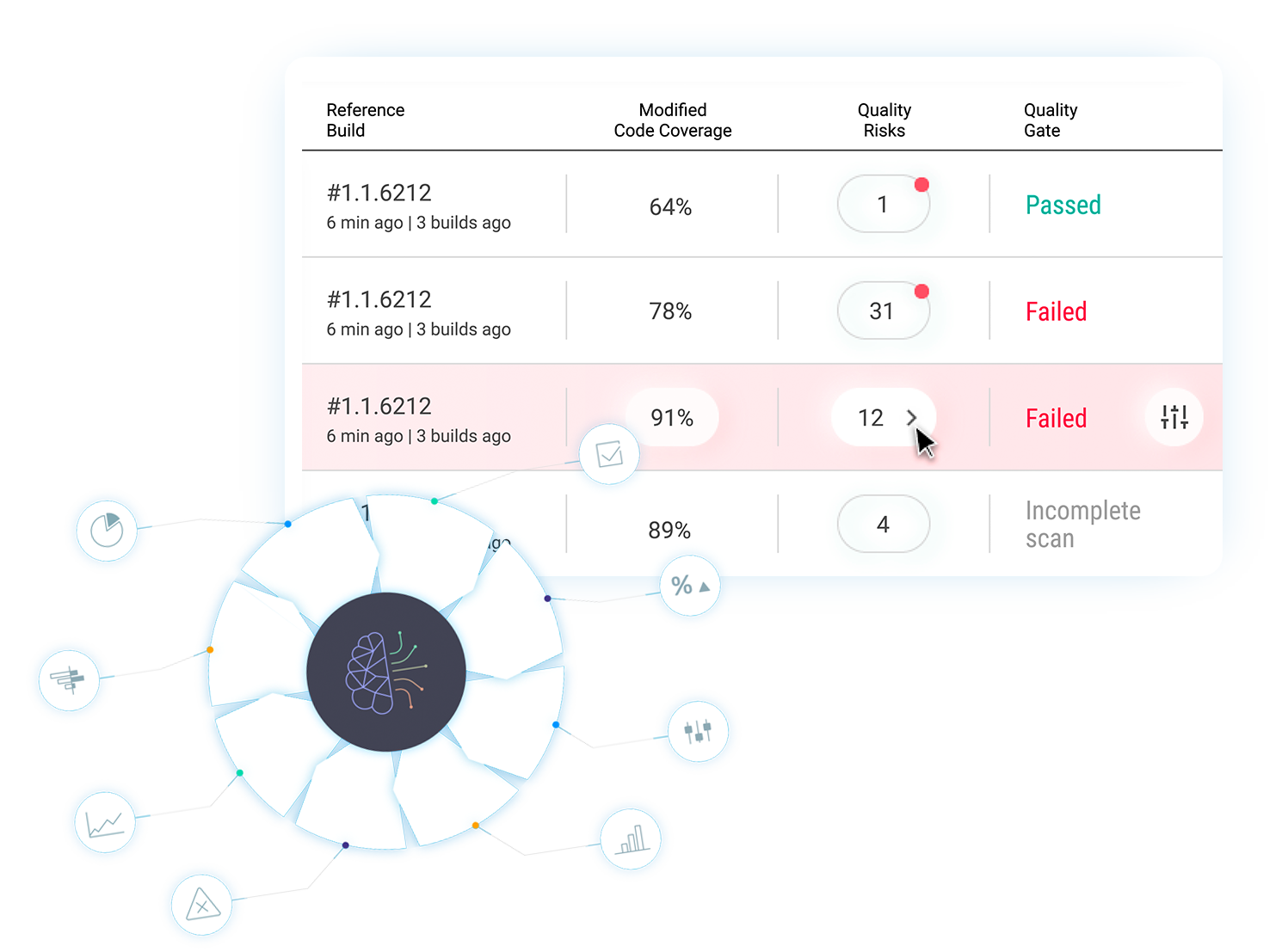

Risk analysis of every change in every build.

Modern development teams run numerous builds throughout the day. Some of these builds might be good candidates for promotion to testing and production, and some might have severe quality issues. It’s impossible to manually analyze every build and discover all quality issues.

SeaLights Quality Intelligence leverages big data and the power of AI/ML to analyze every change in every build, giving teams clear metrics about quality risks. When a build introduces a high level of quality risk, engineering teams automatically receive feedback and can immediately resolve the problem. With SeaLights, high-quality builds can be confidently promoted in the pipeline.

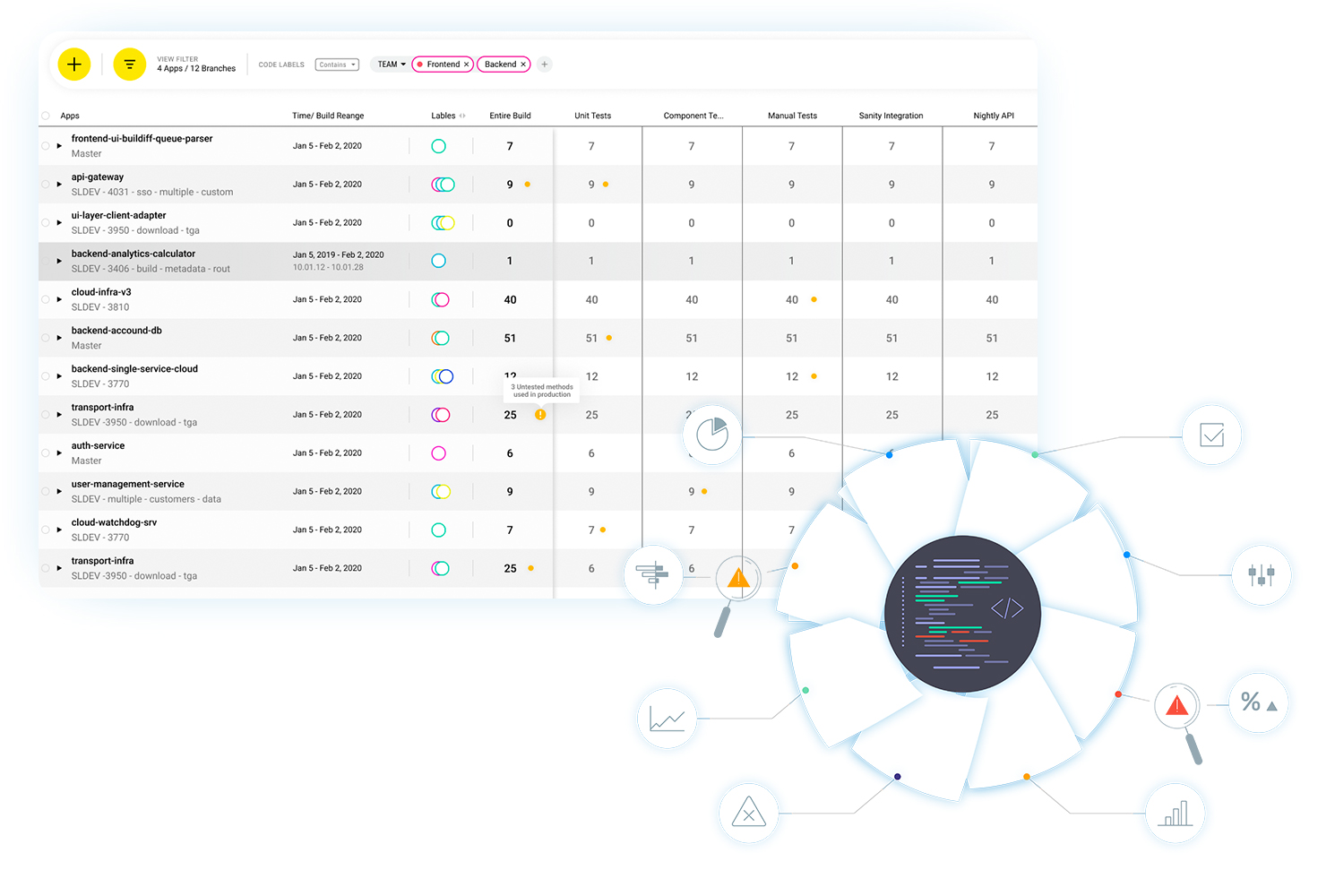

Improve Organizational Visibility of Software Quality Metrics

SeaLights Software Quality Intelligence is the only platform that can identify quality risks as soon as they are introduced into the software pipeline—whether in the planning, development, testing or staging phase. SeaLights provides meaningful metrics that make quality transparent for every stakeholder in the organization.

Test Quality Intelligence.

Reduce wasted testing efforts. Understand which tests are actually needed.

Not all test gaps represent the same level of risk, so they do not all need the same level of test coverage. Classifying test gaps by risk lets teams focus each sprint’s test-building activities on the tests that will eliminate the defects likely to affect the most users in production, rather than wasting time building unnecessary tests.

“What we really like about SeaLights Software Quality Intelligence is that we can quickly see our Quality Risks and easily understand what our tests are not covering as our code base changes over time.”

Software Quality Manager, A Global Leading Media Company

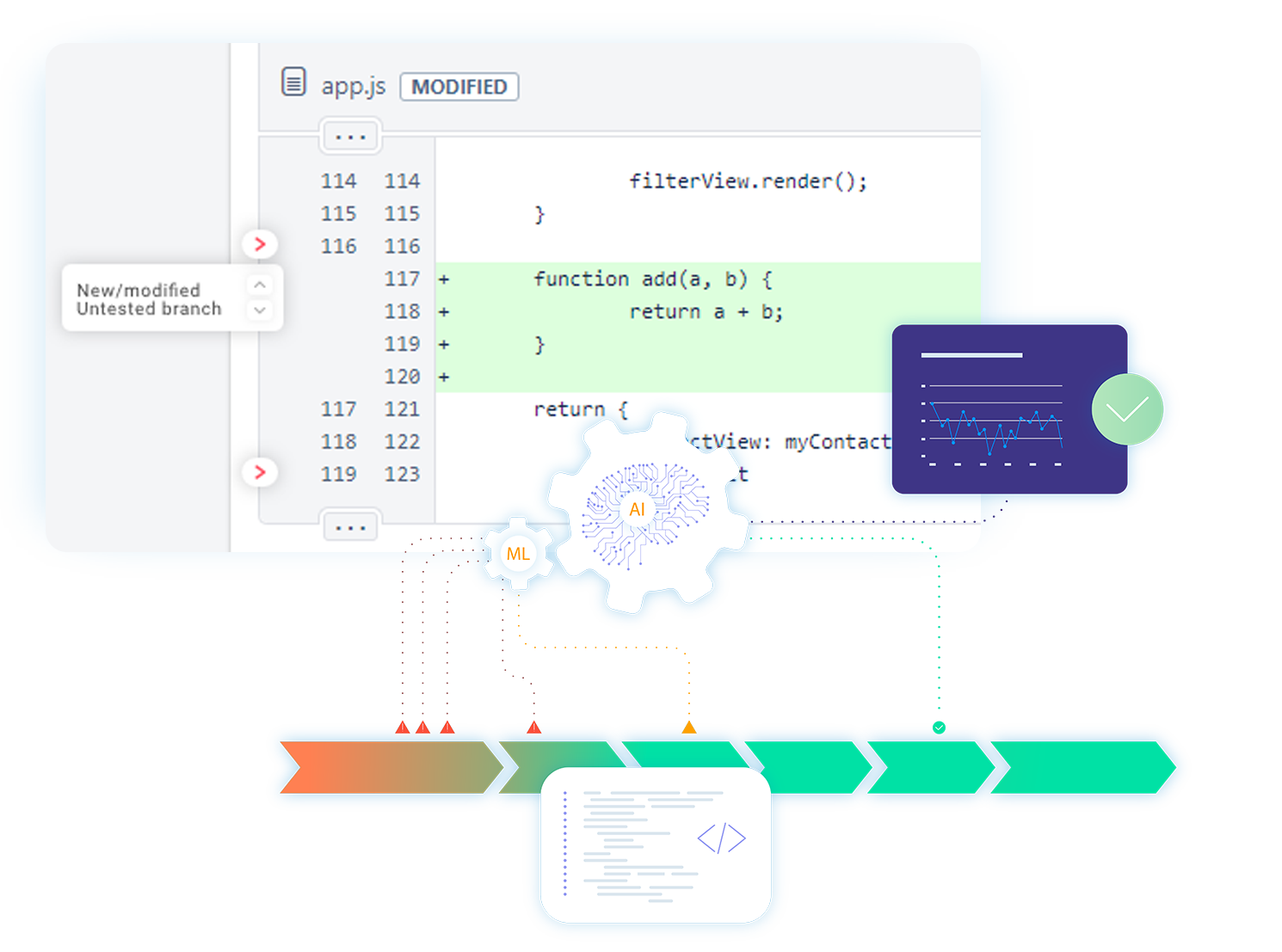

Identify test gaps and prioritize testing activity.

Most engineering teams find it difficult to identify which parts of their product are not sufficiently tested. A large number of tests does not mean the relevant parts of the product are effectively tested.

SeaLights Quality Intelligence analyzes test execution data and correlates it with changes to the codebase and actual production usage, identifying Test Gaps—critical product functionality that is not tested or is missing specific tests (for example, unit tests or functional tests).

Teams can use Test Gaps to prioritize their investment in testing, and create only the tests that are actually needed, when they are needed.
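The idea of correlating code changes, test execution, and production usage can be illustrated with a minimal sketch. This is not the SeaLights implementation or API; the `Method` record, the `find_test_gaps` function, and the call-count threshold are all hypothetical names and assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Method:
    """Hypothetical per-method record assembled from three data sources."""
    name: str
    changed: bool            # modified in the build under analysis
    covered_by: frozenset    # test stages that executed it, e.g. {"unit"}
    production_calls: int    # observed production usage (assumed metric)


def find_test_gaps(methods, required_stages=("unit", "functional"), min_calls=1):
    """Return (method name, missing stages) for changed, used, under-tested code.

    Results are ordered by production usage, most-used first, so the
    riskiest gaps appear at the top of the list.
    """
    gaps = []
    for m in methods:
        # Ignore code that did not change or that users never reach.
        if not m.changed or m.production_calls < min_calls:
            continue
        missing = [s for s in required_stages if s not in m.covered_by]
        if missing:
            gaps.append((m.name, missing, m.production_calls))
    gaps.sort(key=lambda g: g[2], reverse=True)
    return [(name, missing) for name, missing, _ in gaps]


methods = [
    Method("checkout", True, frozenset({"unit"}), 500),
    Method("login", True, frozenset({"unit", "functional"}), 900),
    Method("export", True, frozenset(), 20),
    Method("legacy", False, frozenset(), 1000),
    Method("unused", True, frozenset(), 0),
]
for name, missing in find_test_gaps(methods):
    print(name, "is missing:", ", ".join(missing))
```

In this sketch, `login` is fully tested, `legacy` did not change, and `unused` is never called in production, so only `checkout` and `export` are reported as gaps.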

Visibility into the effectiveness of tests.

Development and QA teams invest a lot of time and money in building tests, and large sets of tests can take a long time to run, slowing down builds. Normally it’s not possible to understand which tests are actually relevant to a specific software change or set of changes and need to be executed. This means the entire set of tests has to be run for every change.

SeaLights Quality Intelligence gives teams complete visibility into which tests are required for each code change. They can discover:

- Which existing tests are not needed for a particular change — “too many tests” — and skip them to save testing time

- Which tests are missing for critical parts of the product
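Both discoveries listed above can be sketched with a simple mapping from each test to the files it exercised in a previous run. This is an illustrative assumption, not how SeaLights is documented to work; `select_tests`, `untested_changes`, and the footprint data are hypothetical.

```python
def select_tests(test_footprints, changed_files):
    """Split tests into those touching the change and those safe to skip.

    test_footprints maps a test name to the set of files it executed
    in an earlier run (assumed to be collected by a coverage tool).
    """
    changed = set(changed_files)
    selected, skipped = [], []
    for test, files in sorted(test_footprints.items()):
        if changed & set(files):
            selected.append(test)
        else:
            skipped.append(test)
    return selected, skipped


def untested_changes(test_footprints, changed_files):
    """Return changed files that no known test executes."""
    covered = set().union(*test_footprints.values())
    return sorted(set(changed_files) - covered)


footprints = {
    "test_cart": {"cart.py", "pricing.py"},
    "test_login": {"auth.py"},
    "test_search": {"search.py"},
}
changed = ["pricing.py", "report.py"]

selected, skipped = select_tests(footprints, changed)
print("run:", selected)
print("skip:", skipped)
print("no tests cover:", untested_changes(footprints, changed))
```

Here only `test_cart` needs to run for the change, while `report.py` is flagged because no existing test exercises it.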

Provide End to End Traceability

Customized for Any Tool and Framework

We Care About Your Data

Use a fully secured platform that protects your privacy.

We never access the source code, and your data stays encrypted, always.
